RoboNinja: Learning an Adaptive Cutting Policy for Multi-Material Objects

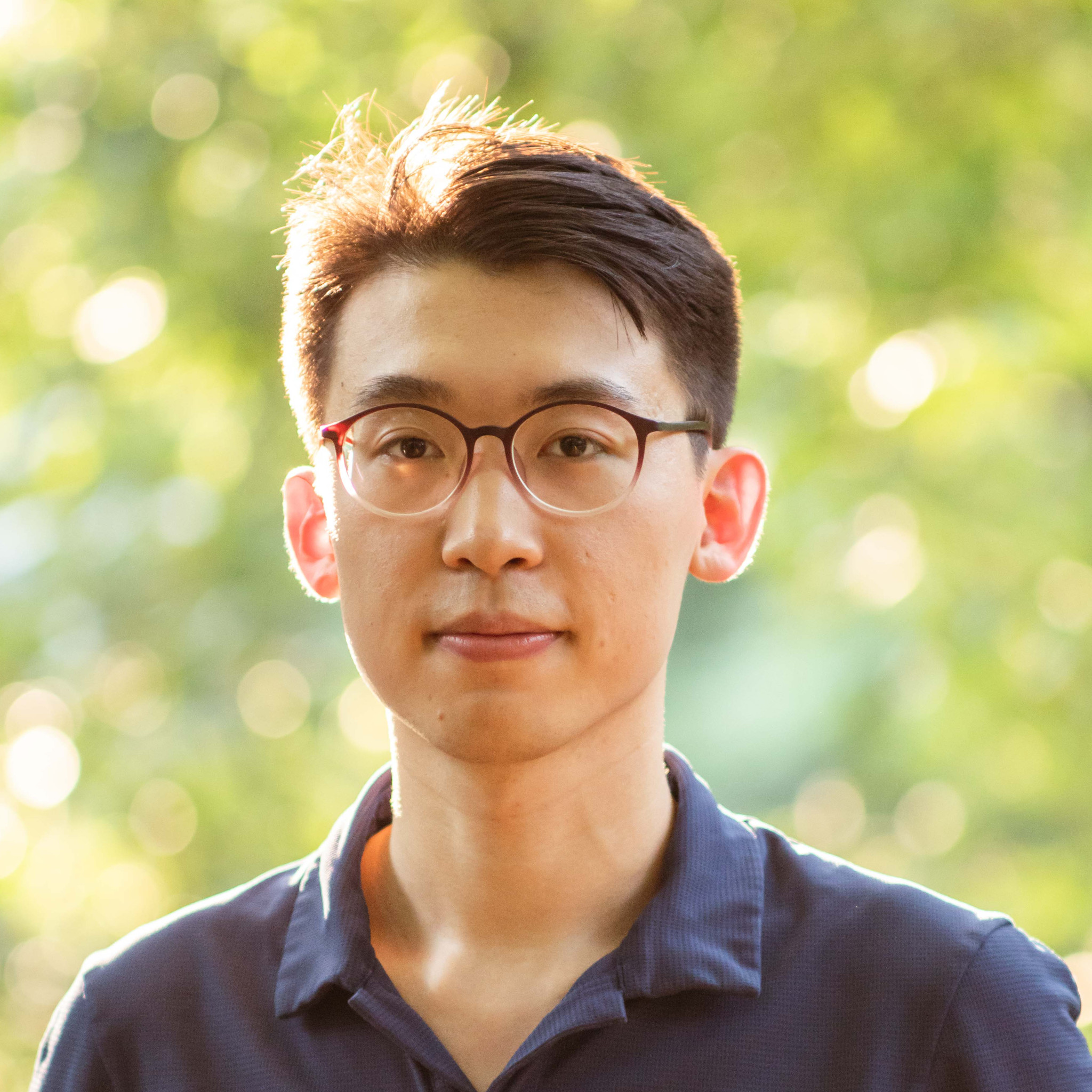

We introduce RoboNinja, a learning-based cutting system for multi-material objects (i.e., soft objects with rigid cores such as avocados or mangos). In contrast to prior works using open-loop cutting actions to cut through single-material objects (e.g., slicing a cucumber), RoboNinja aims to remove the soft part of an object while preserving the rigid core, thereby maximizing the yield. To achieve this, our system closes the perception-action loop by utilizing an interactive state estimator and an adaptive cutting policy. The system first employs sparse collision information to iteratively estimate the position and geometry of an object's core and then generates closed-loop cutting actions based on the estimated state and a tolerance value. The "adaptiveness" of the policy is achieved through the tolerance value, which modulates the policy's conservativeness when encountering collisions, maintaining an adaptive safety distance from the estimated core. Learning such cutting skills directly on a real-world robot is challenging. Yet, existing simulators are limited in simulating multi-material objects or computing the energy consumption during the cutting process. To address this issue, we develop a differentiable cutting simulator that supports multi-material coupling and allows for the generation of optimized trajectories as demonstrations for policy learning. Furthermore, by using a low-cost force sensor to capture collision feedback, we were able to successfully deploy the learned model in real-world scenarios, including objects with diverse core geometries and soft materials.

Paper

Latest version: arXiv

Robotics: Science and Systems (RSS) 2023

Team

1 Columbia University 2 CMU 3 UC Berkeley 4 UC San Diego 5 UMass Amherst & MIT-IBM Lab

BibTeX

@inproceedings{xu2023roboninja,
  title={RoboNinja: Learning an Adaptive Cutting Policy for Multi-Material Objects},
  author={Xu, Zhenjia and Xian, Zhou and Lin, Xingyu and Chi, Cheng and Huang, Zhiao and Gan, Chuang and Song, Shuran},
  booktitle={Proceedings of Robotics: Science and Systems (RSS)},
  year={2023}
}

Technical Summary Video (with audio)

Real-world Evaluation

(1) Avocado

The robot first lowers the knife to cut into the avocado. When the knife collides with the core inside, the robot retracts to its state from a few steps earlier, and the collision signal is used to update the estimate of the core state. The robot then continues cutting based on the updated estimate. After a few collisions, the system has iteratively refined its estimate of the core and generates a physically plausible cutting trajectory that removes most of the avocado's flesh. To better resemble real-world scenarios, we execute the same policy multiple times with different initial rotation angles to cut off more soft flesh from different directions.
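The collide, retract, re-estimate loop described above can be illustrated with a toy 2D sketch. This is not the learned RoboNinja policy or its state estimator: it assumes a circular core centred at the origin, a straight vertical cut, and a hand-written update rule that grows the estimated radius to the farthest collision point. The function name `cut_with_retraction` and all parameters (`margin`, `step`, `retract_steps`, `max_collisions`) are hypothetical, with `margin` standing in loosely for the safety distance kept from the estimated core.

```python
import math


def cut_with_retraction(true_radius, margin=0.05, step=0.1, height=2.0,
                        retract_steps=3, max_collisions=10):
    """Toy loop: descend, retract on collision, grow the core estimate, retry.

    Returns (estimated_radius, collision_points), or (None, collision_points)
    if the collision budget is exhausted before the cut completes.
    """
    est_radius = 0.0   # current estimate of the core radius (assumed circular)
    collisions = []    # knife positions where a collision was sensed
    y = height         # knife tip starts above the object

    while y > -height:
        # Cut along a vertical line just outside the estimated core.
        x = est_radius + margin
        y_next = y - step

        # Simulated collision signal: the next position lies inside the true core.
        if math.hypot(x, y_next) < true_radius:
            collisions.append((x, y_next))
            if len(collisions) > max_collisions:
                return None, collisions
            # Update the estimate so it covers the collision point.
            est_radius = max(est_radius, math.hypot(x, y_next))
            # Retract to the state a few steps earlier before continuing.
            y = min(height, y + retract_steps * step)
        else:
            y = y_next

    return est_radius, collisions


est, hits = cut_with_retraction(true_radius=1.0)
print(f"estimated radius: {est:.3f} after {len(hits)} collision(s)")
```

Each collision pushes the cutting line outward by at least `margin`, so the loop either finishes the cut or runs out of its collision budget. In the real system the estimate is a full core geometry and the trajectory is generated by a learned policy rather than by this fixed rule.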

(2) Mango

To effectively cut through fiber-rich material, such as mango skin, we add a horizontal, repetitive back-and-forth slicing primitive to our vertical cutting trajectory.

(3) More Fruits

Note that in practice, the knife may deform visibly and the object pose may change during cutting. Both effects yield misleading collision positions and can degrade the accuracy of state estimation. However, our cutting policy is robust enough to complete the task even with inaccurate estimates of the core.

(4) Bone-in-meat (oxtail)

To better resemble real-world situations, we employ a bimanual setup, where one arm with a parallel-jaw gripper (WSG50) holds the bone and the other arm performs the cutting action.

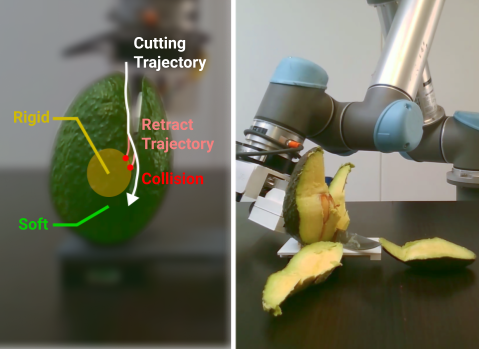

Real-world Setup

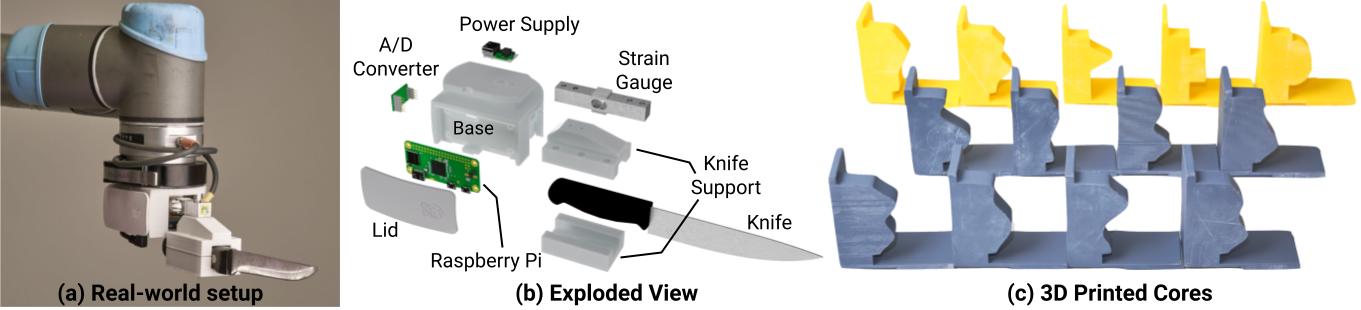

We design and construct a compact cutting tool equipped with a force sensor. The force is measured by a strain gauge as an analog electrical signal. The signal is then converted to a digital signal by an A/D converter and transmitted to the robot controller through a Raspberry Pi Zero. We also 3D print 8 in-distribution and 5 out-of-distribution geometries from the test set for evaluation. For the soft material, we use Kinetic Sand as a proxy because of its stable physical properties.
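The page does not say how the digitized force signal is turned into a collision event. One simple possibility, shown here purely as an assumption, is to flag a collision when the force stays above a threshold for several consecutive samples, which filters out single-sample noise spikes. The function name `detect_collision`, the threshold, and the sample values are all hypothetical.

```python
def detect_collision(forces, threshold=5.0, consecutive=3):
    """Return the index where a sustained force spike begins, or None.

    A collision is flagged once `consecutive` samples in a row exceed
    `threshold`; the returned index is the first sample of that run.
    """
    run = 0  # length of the current run of above-threshold samples
    for i, force in enumerate(forces):
        run = run + 1 if force > threshold else 0
        if run >= consecutive:
            return i - consecutive + 1
    return None


# Hypothetical readings: one isolated spike (ignored), then a sustained rise.
readings = [1.0, 2.0, 6.0, 1.0, 6.0, 7.0, 8.0, 2.0]
print(detect_collision(readings))
```

Requiring a short run of samples trades a small detection delay for fewer false positives; a real deployment would tune both values against the sensor's noise level.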

Evaluation on 3D Printed Cores and Kinetic Sand

(1) In-distribution Geometries

(2) Novel Geometries

Our simulation-trained policy demonstrates strong generalization capabilities, effectively handling both in-distribution and out-of-distribution cores in a real-world setting. With only a few collisions, it is able to accurately estimate the core geometry and cut off the majority of the sand with a smooth cutting trajectory.

Comparison in Simulation

In the 2D view (bottom), the ground truth geometry is shown in gray, and the estimation is shown in brown. In both views, the forward trajectory of the knife is shown in blue, and the retraction trajectories due to collisions are shown in red. The cutting trajectory of [RL] is very jittery and becomes too conservative after a few collisions. [Greedy] strictly follows the contour of the estimated geometry, leading to excessive energy consumption during abrupt rotations. Both [NN] and [Non-Adaptive] get stuck and cannot complete the cutting task within 10 collisions. [RoboNinja] is able to iteratively update the estimate of the core after each collision and adaptively adjust the cutting trajectory with optimized energy consumption.

Acknowledgements

We would like to thank Huy Ha, Zeyi Liu, and Mandi Zhao for their helpful feedback and fruitful discussions. This work was supported in part by NSF Awards 2037101 and 2132519, and by Toyota Research Institute. Dr. Gan was supported by the DARPA MCS program and gift funding from MERL, Cisco, and Amazon. We would like to thank Google for the UR5 robot hardware. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors.

Contact

If you have any questions, please feel free to contact Zhenjia Xu.